OpenAI is moving to publish the results of its internal AI model safety evaluations more regularly, in what the company says is an effort to increase transparency.

On Wednesday, OpenAI launched the Safety Evaluations Hub, a web page showing how the company’s models score on various tests for harmful content generation, jailbreaks, and hallucinations. OpenAI says it will use the hub to share metrics on an “ongoing basis” and that it intends to update the hub with “major model updates” going forward.

“As the science of AI evaluation evolves, we aim to share our progress on developing more scalable ways to measure model capability and safety,” OpenAI wrote in a blog post. “By sharing a subset of our safety evaluation results here, we hope this will not only make it easier to understand the safety performance of OpenAI systems over time, but also support community efforts to increase transparency across the field.”

OpenAI says it may add additional evaluations to the hub over time.

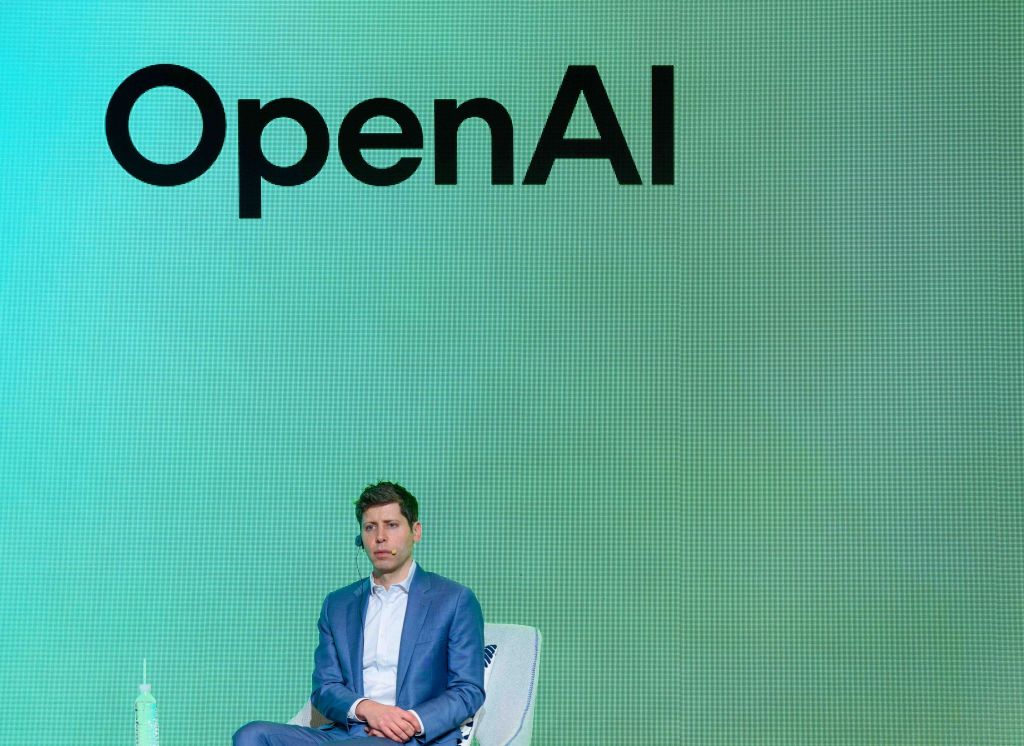

In recent months, OpenAI has drawn the ire of some ethicists for reportedly rushing the safety testing of certain flagship models and failing to release technical reports for others. The company’s CEO, Sam Altman, also stands accused of misleading OpenAI executives about model safety reviews prior to his brief ouster in November 2023.

Late last month, OpenAI was forced to roll back an update to the default model powering ChatGPT, GPT-4o, after users began reporting that it responded in an overly validating and agreeable way. X was flooded with screenshots of ChatGPT applauding all kinds of problematic, dangerous decisions and ideas.

OpenAI said it would implement several fixes and changes to prevent such incidents in the future, including introducing an opt-in “alpha phase” for some models that would allow certain ChatGPT users to test the models and give feedback before launch.