Pruna AI, a European startup that has been working on compression algorithms for AI models, is making its optimization framework open source on Thursday.

Pruna AI has been creating a framework that applies several efficiency methods, such as caching, pruning, quantization and distillation, to a given AI model.

“We also standardize saving and loading the compressed models, applying combinations of these compression methods, and also evaluating your compressed model after you compress it,” Pruna AI co-founder and CTO John Rachwan told TechCrunch.

More specifically, Pruna AI’s framework can evaluate whether there is significant quality loss after compressing a model, as well as the performance gains you get.

“If I were to use a metaphor, we are similar to how Hugging Face standardized transformers and diffusers: how to call them, how to save them, load them, etc. We are doing the same, but for efficiency methods,” he added.
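Pruna AI’s evaluation interface is not described in the article, so the following is only a minimal Python sketch of the idea of checking quality loss and performance gains together. The names `EvalResult`, `evaluate` and `compare` are hypothetical, and two toy classifiers stand in for a real model and its compressed version. With toy functions the timings are meaningless; the point is the shape of the report: how much accuracy was lost and how much faster each prediction became.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Sequence


@dataclass
class EvalResult:
    accuracy: float        # fraction of inputs where the prediction matches the label
    mean_latency_s: float  # average wall-clock seconds per prediction


def evaluate(model: Callable[[float], int],
             inputs: Sequence[float],
             labels: Sequence[int]) -> EvalResult:
    """Run the model on every input, counting correct predictions and timing the loop."""
    correct = 0
    start = time.perf_counter()
    for x, y in zip(inputs, labels):
        if model(x) == y:
            correct += 1
    elapsed = time.perf_counter() - start
    return EvalResult(correct / len(inputs), elapsed / len(inputs))


def compare(base: EvalResult, compressed: EvalResult) -> Dict[str, float]:
    """Quality lost and speed gained by the compressed model relative to the original."""
    speedup = (base.mean_latency_s / compressed.mean_latency_s
               if compressed.mean_latency_s > 0 else float("inf"))
    return {"accuracy_drop": base.accuracy - compressed.accuracy, "speedup": speedup}


# Hypothetical toy models: the "compressed" one rounds its input to one decimal first.
def base_model(x: float) -> int:
    return int(x > 0.5)


def compressed_model(x: float) -> int:
    return int(round(x, 1) > 0.5)


inputs = [0.1, 0.2, 0.54, 0.8, 0.9]
labels = [0, 0, 1, 1, 1]
report = compare(evaluate(base_model, inputs, labels),
                 evaluate(compressed_model, inputs, labels))
print(report)
```

Here the "compressed" model misclassifies 0.54 (it rounds to 0.5), so the report shows an accuracy drop of about 0.2.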

Big AI labs have already been using various compression methods. For instance, OpenAI has relied on distillation to create faster versions of its flagship models.

This is likely how OpenAI developed GPT-4 Turbo, a faster version of GPT-4. Similarly, the Flux.1-schnell image generation model is a distilled version of the Flux.1 model from Black Forest Labs.

Distillation is a technique used to extract knowledge from a large AI model with a “teacher-student” model. Developers send requests to a teacher model and record the outputs. The answers are sometimes compared with a dataset to see how accurate they are. These outputs are then used to train the student model, which is trained to approximate the teacher’s behavior.
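The teacher-student loop described above can be sketched in a few lines of Python. This is a deliberately tiny illustration, not how any lab distills real models: the `teacher` function is a hypothetical stand-in for a large model, the "student" is just a straight line fitted by least squares to the teacher’s recorded outputs, and the dataset check uses a made-up ground truth. Real distillation trains a smaller neural network, often on the teacher’s full output distributions rather than single answers.

```python
import math
from typing import Callable, List, Sequence, Tuple

Pair = Tuple[float, float]


def teacher(x: float) -> float:
    """Hypothetical stand-in for a large model: mostly linear, with a small wiggle."""
    return 3.0 * x + 2.0 + 0.1 * math.sin(5.0 * x)


def record_teacher_outputs(model: Callable[[float], float],
                           requests: Sequence[float]) -> List[Pair]:
    """Step 1: send each request to the teacher and record (input, output)."""
    return [(x, model(x)) for x in requests]


def fraction_accurate(pairs: Sequence[Pair], truths: Sequence[float],
                      tol: float = 0.2) -> float:
    """Optional step: how often the teacher's answers are within `tol` of a reference dataset."""
    hits = sum(1 for (_, y), t in zip(pairs, truths) if abs(y - t) <= tol)
    return hits / len(pairs)


def train_student(pairs: Sequence[Pair]) -> Tuple[float, float]:
    """Step 2: fit a tiny student y = a*x + b to the teacher's outputs by least squares."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var = sum((x - mean_x) ** 2 for x, _ in pairs)
    a = cov / var
    return a, mean_y - a * mean_x


requests = [i / 50 for i in range(101)]  # inputs spread over [0, 2]
pairs = record_teacher_outputs(teacher, requests)
print(fraction_accurate(pairs, [3.0 * x + 2.0 for x in requests]))
a, b = train_student(pairs)
print(f"student: y = {a:.3f} * x + {b:.3f}")
```

The student never sees the teacher’s internals, only its answers, yet ends up approximating its behavior at a fraction of the cost.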

“For big companies, what they usually do is build this stuff in-house. And what you can find in the open source world is usually based on single methods. For example, let’s say one quantization method for LLMs, or one caching method for diffusion models,” Rachwan said. “But you cannot find a tool that aggregates all of them, makes them all easy to use and combine together. And this is the big value that Pruna is bringing right now.”

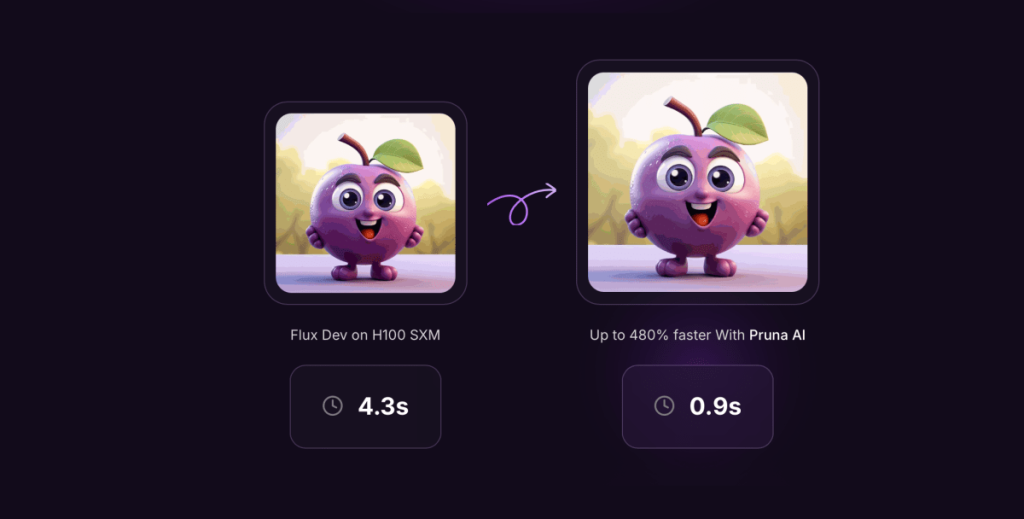

While Pruna AI supports any kind of model, from large language models to diffusion models, speech-to-text models and computer vision models, the company is focusing more specifically on image and video generation models right now.

Some of Pruna AI’s existing users include Scenario and PhotoRoom. In addition to the open source edition, Pruna AI has an enterprise offering with advanced optimization features, including an optimization agent.

“The most exciting feature that we are releasing soon will be a compression agent,” Rachwan said. “Basically, you give it your model, you say: ‘I want more speed, but don’t drop my accuracy by more than 2%.’ And then, the agent will just do its magic.”
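Pruna AI has not described how its agent works internally. As a minimal sketch of the kind of constrained search such an agent could perform, the Python below tries every combination of compression methods and keeps the fastest one whose accuracy drop stays within budget. Everything here is an assumption for illustration: the per-method numbers in `METHOD_EFFECTS` are invented, "2%" is read as two percentage points, and the rule that speedups multiply while accuracy drops add is a toy model. A real agent would benchmark each candidate on the actual model.

```python
from itertools import combinations
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical per-method effects: (speedup factor, accuracy drop in percentage points).
METHOD_EFFECTS: Dict[str, Tuple[float, float]] = {
    "caching": (1.8, 0.5),
    "quantization": (2.0, 1.2),
    "pruning": (1.3, 1.5),
    "distillation": (3.0, 4.0),
}

Choice = Tuple[Tuple[str, ...], float, float]  # (methods, speedup, accuracy drop)


def estimate(combo: Sequence[str]) -> Tuple[float, float]:
    """Toy assumption: speedups multiply and accuracy drops add up."""
    speedup, drop = 1.0, 0.0
    for method in combo:
        s, d = METHOD_EFFECTS[method]
        speedup *= s
        drop += d
    return speedup, drop


def best_combination(max_drop: float) -> Optional[Choice]:
    """Exhaustively search all non-empty combinations; keep the fastest within budget."""
    best: Optional[Choice] = None
    methods = sorted(METHOD_EFFECTS)
    for r in range(1, len(methods) + 1):
        for combo in combinations(methods, r):
            speedup, drop = estimate(combo)
            if drop <= max_drop and (best is None or speedup > best[1]):
                best = (combo, speedup, drop)
    return best


print(best_combination(max_drop=2.0))
```

Exhaustive search is only practical for a handful of methods, since the number of combinations doubles with each one added; a production system would likely need a smarter search and real measurements.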

Pruna AI charges by the hour for its pro version. “It’s similar to how you would think of a GPU when you rent a GPU on AWS or any cloud service,” Rachwan said.

And if your model is a critical part of your AI infrastructure, you will end up saving a lot of money on inference with the optimized model. For example, Pruna AI has made a Llama model eight times smaller without much loss using its compression framework. Pruna AI hopes its customers will think of its compression framework as an investment that pays for itself.
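The article does not say which methods produced the eightfold reduction or what precision the Llama model started from. For intuition only: storing weights in 4 bits instead of 32-bit floats is one familiar way to shrink weight storage by 8x. The Python sketch below shows that arithmetic for a hypothetical 8-billion-parameter model, plus a simple symmetric per-tensor quantizer to show the rounding error that comes with it. It is not Pruna AI’s method.

```python
from typing import List, Sequence, Tuple


def weight_storage_bytes(num_params: int, bits_per_param: int) -> float:
    """Bytes needed to store the weights alone (ignores scales and other metadata)."""
    return num_params * bits_per_param / 8


def quantize_symmetric(weights: Sequence[float], bits: int = 4) -> Tuple[List[int], float]:
    """Map floats to signed integers in [-qmax, qmax] using one shared scale.

    The integers fit in `bits` bits; a real implementation would pack them
    (two 4-bit values per byte) instead of keeping a Python list.
    """
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


params = 8_000_000_000  # hypothetical parameter count
fp32 = weight_storage_bytes(params, 32)
int4 = weight_storage_bytes(params, 4)
print(f"{fp32 / 1e9:.0f} GB -> {int4 / 1e9:.0f} GB ({fp32 / int4:.0f}x smaller)")

weights = [0.7, -0.35, 0.1, 0.0, -0.62]
q, scale = quantize_symmetric(weights)
restored = dequantize(q, scale)
print(q, [round(r, 3) for r in restored])
```

Each restored weight is off by at most half the scale, which is why aggressive quantization is usually paired with an evaluation step that checks whether the quality loss is acceptable.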

Pruna AI raised a $6.5 million seed funding round a few months ago. Investors in the startup include EQT Ventures, Daphni, Motier Ventures and Kima Ventures.